yield · AI Memory Assistant

Full-stack second brain that unifies streaming AI chat, persistent memory management, file-based RAG, and smart reminders. Built with FastAPI, Next.js, xAI Grok-3, and Supabase vector store.

The Challenge

Users need one centralized system where they can store and retrieve personal knowledge, upload documents and have the AI understand their content, schedule reminders, and maintain a searchable knowledge base of facts about themselves.

The Solution

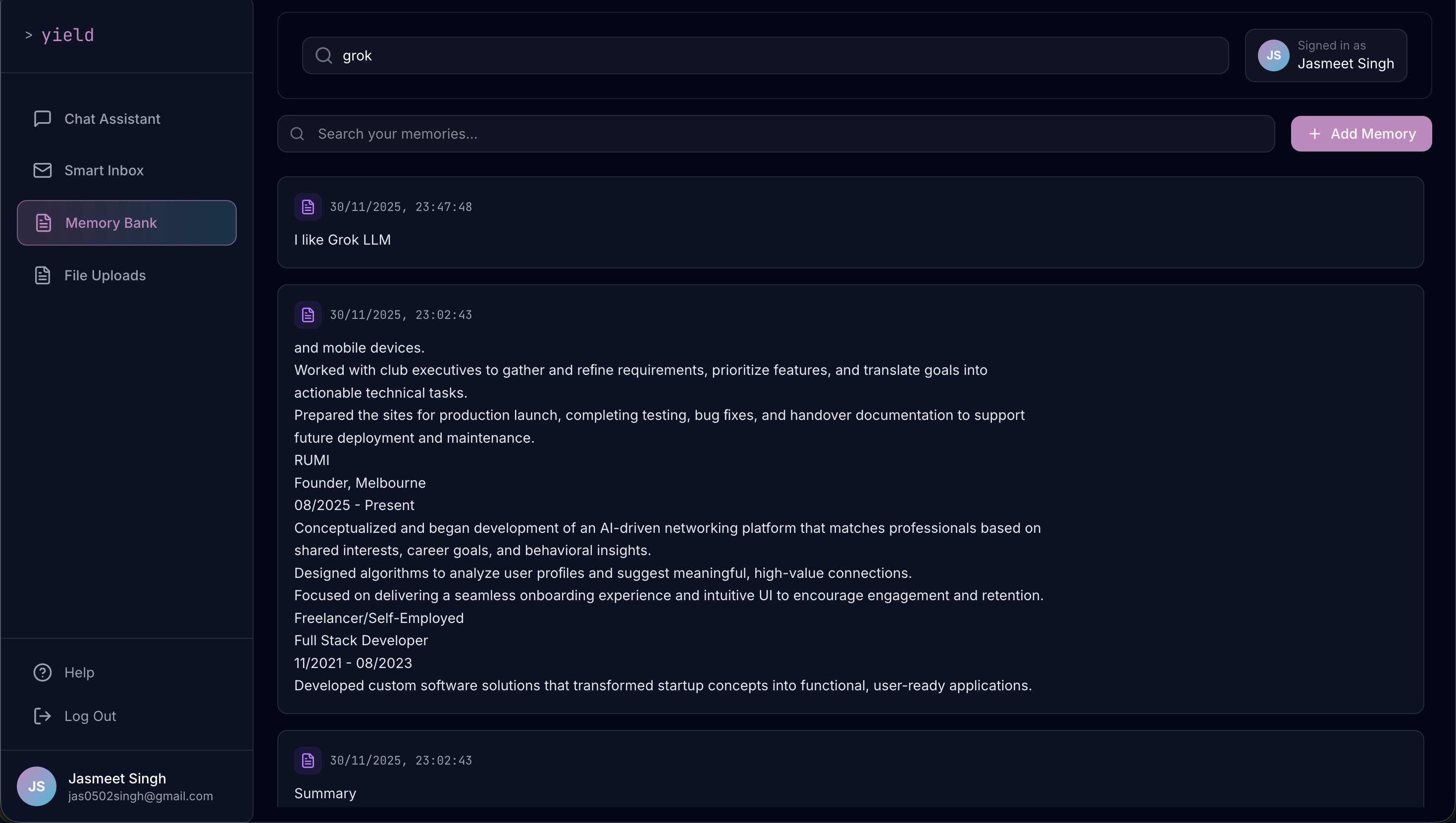

I built a full-stack AI memory assistant that functions as a proactive "second brain" by integrating streaming chat, long-term memory, and document analysis into a single platform. Powered by xAI Grok-3 and LangChain, the system uses a background processor to analyze conversations in real time, extracting atomic facts and resolving pronouns to build a persistent third-person memory profile for the user.
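To make the background processor's role concrete, here is a minimal sketch of how its structured reply could be validated before anything is written to memory. It assumes the model returns JSON with an intent of SAVE, DELETE, or NONE plus a list of extracted facts; the field names `intent` and `facts`, the `MemoryDecision` type, and the fall-back-to-NONE policy are illustrative assumptions, not the project's actual schema.

```python
import json
from dataclasses import dataclass, field

VALID_INTENTS = {"SAVE", "DELETE", "NONE"}


@dataclass
class MemoryDecision:
    """Hypothetical container for one processor verdict."""
    intent: str
    facts: list[str] = field(default_factory=list)


def parse_processor_output(raw: str) -> MemoryDecision:
    """Parse the model's JSON reply; anything malformed degrades to NONE."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return MemoryDecision("NONE")
    if not isinstance(data, dict):
        return MemoryDecision("NONE")

    intent = str(data.get("intent", "NONE")).upper()
    if intent not in VALID_INTENTS:
        return MemoryDecision("NONE")

    facts_raw = data.get("facts", [])
    if not isinstance(facts_raw, list):
        facts_raw = []
    # Keep only non-empty strings, trimmed of surrounding whitespace.
    facts = [f.strip() for f in facts_raw if isinstance(f, str) and f.strip()]

    # A SAVE or DELETE without any usable fact is treated as a no-op.
    if intent != "NONE" and not facts:
        return MemoryDecision("NONE")
    return MemoryDecision(intent, facts)
```

Treating every malformed reply as NONE means a bad model output can never write to or delete from the memory store; the worst case is a missed fact.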

Implementation Details

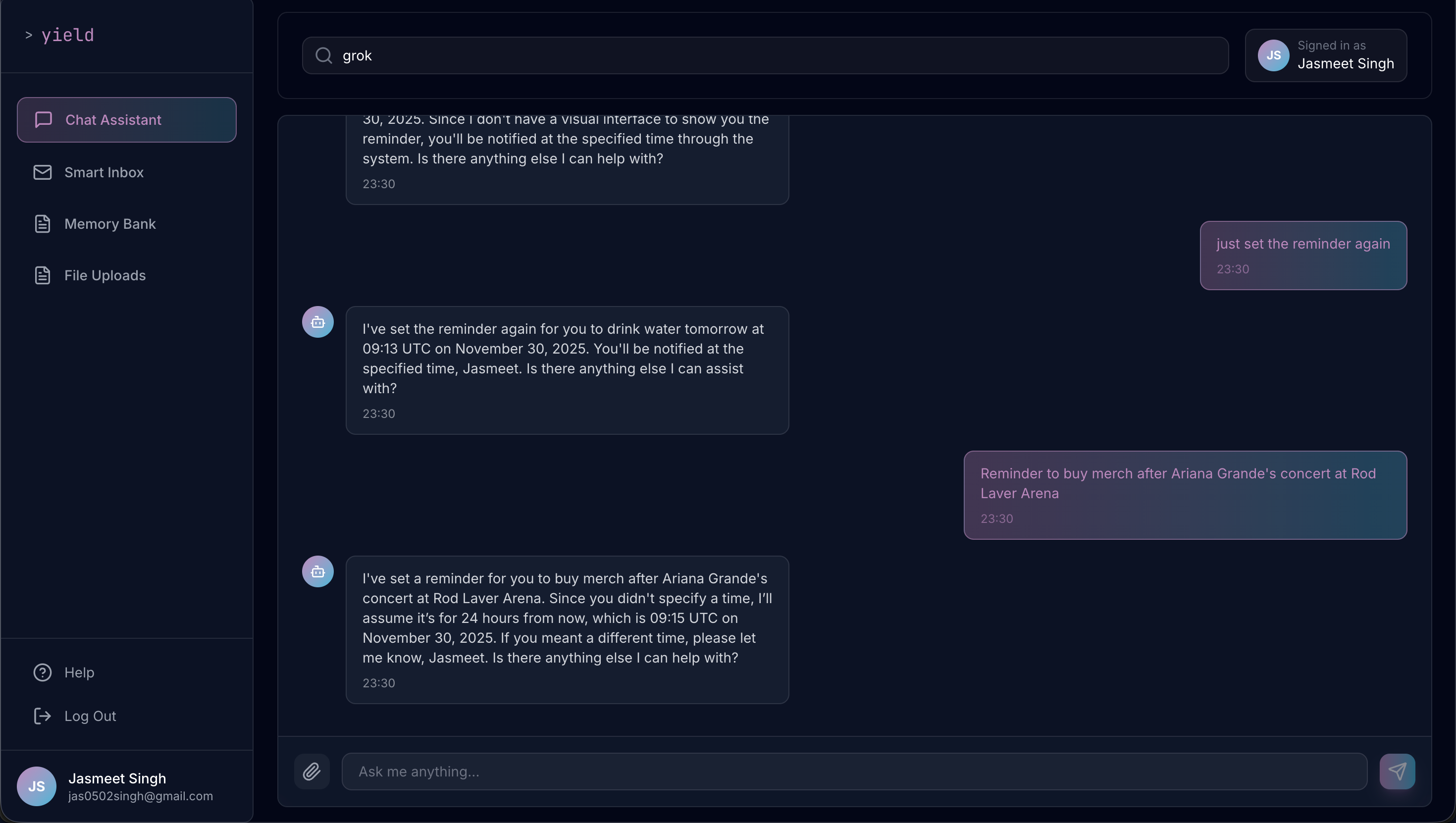

The result is a production-ready, AI-powered memory assistant that serves as a personal second brain. The system features a streaming chat interface powered by xAI's Grok-3 model, memory extraction and storage using Google Generative AI embeddings, a file-upload RAG pipeline for document ingestion, and a background scheduler for smart reminders. The architecture separates concerns: a FastAPI backend handles AI orchestration, memory processing, and background tasks, while a Next.js frontend provides a responsive dashboard with Redux state management. All data is scoped to the authenticated user through JWT authentication, for privacy and security. The system proactively retrieves relevant memories to provide context-aware responses, and a background memory processor automatically sanitizes and extracts facts from conversations.
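The proactive, user-scoped retrieval step can be illustrated with a small in-memory sketch. In the real system the similarity search would run against the Supabase vector store with embeddings from the embedding model; here the `Memory` type, the `retrieve_memories` function, the toy two-dimensional vectors, and the `min_score` threshold are all assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    """Hypothetical stored memory: owner, third-person fact, embedding."""
    user_id: str
    text: str
    embedding: list[float]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_memories(memories, user_id, query_embedding, k=3, min_score=0.5):
    """Return up to k of this user's memories most similar to the query."""
    scored = [
        (cosine(m.embedding, query_embedding), m)
        for m in memories
        if m.user_id == user_id  # user scoping: never read another user's rows
    ]
    relevant = [(s, m) for s, m in scored if s >= min_score]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [m.text for _, m in relevant[:k]]
```

The returned facts would then be prepended to the chat prompt as context. Filtering by `user_id` before scoring, rather than after, keeps another user's memories out of the candidate set entirely.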

Results Achieved

I built a production-ready AI memory assistant with full authentication, user-scoped data access, streaming chat responses, automatic memory extraction, file-based RAG, and smart reminders. The system handles multi-format file uploads and provides context-aware AI responses through proactive memory retrieval. Background processing ensures memory operations don't block chat responses, and the architecture supports horizontal scaling.
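The non-blocking behavior can be sketched with plain `asyncio`: memory processing is scheduled as a task, and the reply streams without waiting for it. This is only one possible mechanism (the write-up does not say whether the backend uses `asyncio` tasks, FastAPI background tasks, or a separate worker), and `process_memories`, `stream_reply`, and `handle_chat` are hypothetical names.

```python
import asyncio


async def process_memories(conversation: list[str], store: list[str]) -> None:
    """Stand-in for the background memory processor (assumed to be slow)."""
    await asyncio.sleep(0.05)  # simulates an LLM call plus a database write
    store.append(f"processed {len(conversation)} messages")


async def stream_reply(tokens: list[str]):
    """Stand-in for the streaming chat model: yields tokens one at a time."""
    for token in tokens:
        await asyncio.sleep(0)  # hand control back to the event loop
        yield token


async def handle_chat(conversation: list[str], store: list[str]):
    # Schedule memory extraction without awaiting it, so the reply starts
    # streaming immediately. The task object is returned so that a reference
    # to it is kept alive until it finishes.
    task = asyncio.create_task(process_memories(conversation, store))
    chunks = [chunk async for chunk in stream_reply(["Hello", ", ", "world"])]
    return "".join(chunks), task


async def main():
    store: list[str] = []
    reply, task = await handle_chat(["hi"], store)
    finished_before_reply = task.done()  # expected to still be running here
    await task  # in a server, the task would simply complete later
    return reply, finished_before_reply, store
```

Running `asyncio.run(main())` returns the full reply while the memory task is still pending, which is the property described above.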

Challenges Faced

- Memory consistency issues where contradictory facts were stored without resolution

- LLM storing both raw and sanitized text, creating duplicate memories

- LLM not executing multi-step tool calls (search-then-delete), requiring agentic loop implementation

- Frontend refetching data on every page navigation, causing redundant API calls

- File upload errors when Supabase Storage expected raw bytes instead of BytesIO objects

- Memory processor needing conversation context to resolve pronouns and extract meaningful facts

- Deep linking to specific chat messages, notifications, and memories from global search results

Solutions Implemented

- Implemented a timestamp-based memory history strategy in which the AI prioritizes the most recent facts and acknowledges changes over time

- Decoupled memory operations by removing add_memory from the main chat model's tools and centralizing all memory writes in the background MemoryProcessor service

- Implemented an agentic loop in the AI service that detects tool calls, executes them, feeds the results back to the model, and generates the final response

- Added client-side caching with hasLoaded flags in Redux slices to prevent redundant API calls when navigating between pages

- Changed file upload to pass raw file_bytes directly to Supabase Storage instead of a BytesIO wrapper

- Modified the memory processor's system prompt to explicitly require JSON output with intent classification (SAVE/DELETE/NONE) and fact extraction

- Added message IDs, notification IDs, and file IDs to search results, with URL hash-based deep linking and scroll-to-message functionality
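The agentic loop from the list above (detect a tool call, execute it, feed the result back, repeat until the model answers in plain text) can be sketched as follows. The message format, the tool names `search_memories` and `delete_memory`, the in-memory `MEMORIES` store, and `scripted_model` (a stand-in for the LLM) are all assumptions for illustration; the real service talks to Grok-3 and Supabase.

```python
# Hypothetical in-memory store standing in for the database.
MEMORIES = {1: "The user lives in Paris.", 2: "The user likes tea."}


def search_memories(query: str) -> list[int]:
    """Return IDs of memories whose text contains the query."""
    return [mid for mid, text in MEMORIES.items() if query.lower() in text.lower()]


def delete_memory(memory_id: int) -> str:
    return "deleted" if MEMORIES.pop(memory_id, None) is not None else "not found"


TOOLS = {"search_memories": search_memories, "delete_memory": delete_memory}


def run_agent(model, user_message: str, max_steps: int = 5) -> str:
    """Call the model repeatedly until it replies without a tool call."""
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        reply = model(messages)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]  # plain text: this is the final answer
        tool = TOOLS.get(call["name"])
        result = tool(**call["args"]) if tool else f"unknown tool {call['name']}"
        # Feed both the call and its result back so the model can take the next step.
        messages.append({"role": "assistant", "tool_call": call})
        messages.append({"role": "tool", "name": call["name"], "content": result})
    return "Stopped: step limit reached."


def scripted_model(messages: list[dict]) -> dict:
    """Stand-in for the LLM: search first, delete the first hit, then answer."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool_call": {"name": "search_memories", "args": {"query": "Paris"}}}
    last = tool_results[-1]
    if last["name"] == "search_memories" and last["content"]:
        return {"tool_call": {"name": "delete_memory",
                              "args": {"memory_id": last["content"][0]}}}
    return {"content": "I removed that memory."}
```

The `max_steps` cap is a design choice in this sketch: it bounds the loop if the model keeps requesting tools, so one request cannot run indefinitely.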
